AI-generated document fraud is becoming one of the most pressing challenges for businesses across industries. The rise of generative AI technologies, including large language models (LLMs) like ChatGPT and image diffusion models, has created new opportunities for bad actors to produce fake documents that look remarkably authentic.

According to Resistant AI, these tools can generate fraudulent bank statements, ID cards, insurance claims, and other sensitive documents in minutes, without requiring any prior technical expertise.

Traditionally, document fraud required time, skill, and access to specialist editing tools. Now, the accessibility of AI-driven platforms has lowered the barrier to entry. Generative AI allows even non-technical users to fabricate convincing forgeries by typing a few simple prompts. A recent poll from the “ThreatGPT” webinar, which surveyed hundreds of fraud professionals across sectors such as banking, insurance, and lending, found that 40% had already encountered AI-generated documents, while 39% were unsure.

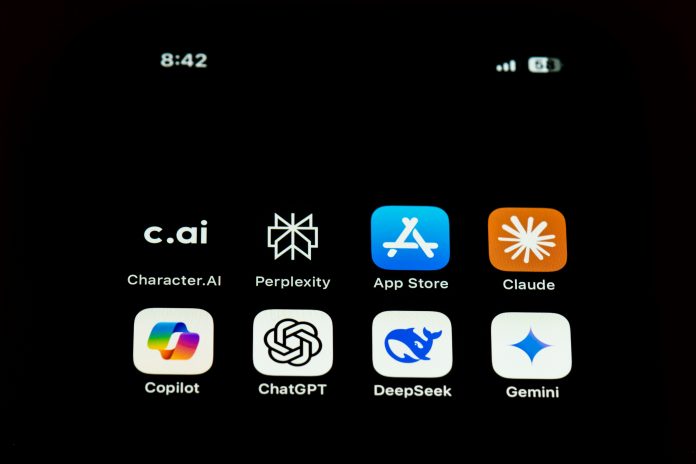

The unprecedented growth of AI tools has played a major role in this surge. Platforms like ChatGPT, Gemini, and Meta AI collectively have more than a billion users. Even if only a fraction of them experiment with fraudulent use cases, the potential number of fake documents circulating online is staggering. While these systems include content safeguards, those safeguards can often be bypassed by slightly rephrasing a prompt. Simply omitting the word “fake” can be enough to get the model to generate realistic fraudulent material.

Generative AI has effectively filled the “opportunity” corner of the traditional fraud triangle, whose other two corners are pressure and rationalisation. With tools this powerful and easily available, the temptation for financially desperate individuals to commit fraud has never been higher. Businesses, particularly those involved in lending, claims processing, and payment services, are already seeing the results, with fraud teams overwhelmed by the volume and complexity of AI-generated submissions.

For firms that interact directly with consumers, first-party fraud poses a significant challenge. These are often one-off attempts by individuals who use their real identities to submit doctored or AI-generated documents. While around 80% of such cases can be identified through metadata checks, more sophisticated users can easily erase these traces, for instance by converting the file to another format. Because the fraudster has no prior fraud history and the document carries no telltale metadata, detection teams are left with little contextual data to flag suspicious activity, a phenomenon experts are calling “zero-day fraud.”

Third-party or organised fraud networks, on the other hand, are now using AI to streamline their operations. Fraud rings can generate hundreds of document variations instantly, adjusting details such as lighting, texture, and background to evade detection. Many combine AI-generated forgeries with legitimate templates obtained illegally, producing high-quality fakes that require advanced verification techniques to uncover.

To counter these risks, financial institutions, insurers, and payment firms must invest in next-generation fraud prevention technologies capable of authenticating documents directly rather than relying solely on contextual or behavioural cues. As the sophistication of AI-driven deception grows, the fight against synthetic document fraud will demand equally advanced detection capabilities powered by AI itself.

Copyright © 2025 RegTech Analyst
